Ethernet is a family of frame-based computer networking technologies for local area networks (LANs). The name comes from the physical concept of the ether. It defines a number of wiring and signaling standards for the physical layer (Layer 1), a means of network access at the Media Access Control (MAC)/Data Link Layer, and a common addressing format.

Ethernet is standardized as IEEE 802.3. The combination of the twisted pair versions of Ethernet for connecting end systems to the network, along with the fiber optic versions for site backbones, is the most widespread wired LAN technology. It has been in use from around 1980 to the present, largely replacing competing LAN standards such as token ring, FDDI, and ARCNET. In recent years, Wi-Fi, the wireless LAN standardized by IEEE 802.11, has become prevalent in home and small office networks and augments Ethernet in larger installations.

History of Ethernet

Ethernet was originally developed at Xerox PARC in 1973–1975. Robert Metcalfe and David Boggs wrote and presented their "Draft Ethernet Overview" before March 1974. In March 1974, R.Z. Bachrach wrote a memo to Metcalfe and Boggs and their management, stating that "technically or conceptually there is nothing new in your proposal" and that "analysis would show that your system would be a failure." This analysis was flawed in that it ignored the "channel capture effect", though this was not understood until 1994. In 1975, Xerox filed a patent application listing Metcalfe and Boggs, plus Chuck Thacker and Butler Lampson, as inventors. In 1976, after the system was deployed at PARC, Metcalfe and Boggs published a seminal paper.

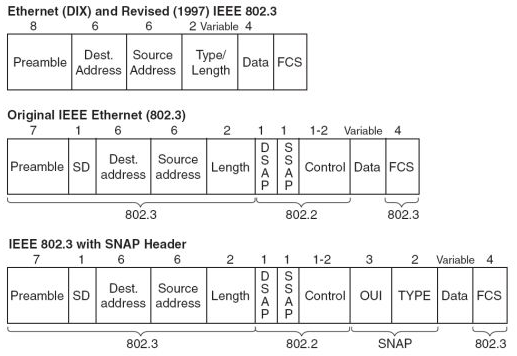

The experimental Ethernet described in that paper ran at 3 Mbps, and had 8-bit destination and source address fields, so Ethernet addresses were not the global addresses they are today. By software convention, the 16 bits after the destination and source address fields were a packet type field, but, as the paper says, "different protocols use disjoint sets of packet types", so those were packet types within a given protocol, rather than the packet type in current Ethernet which specifies the protocol being used.

Metcalfe left Xerox in 1979 to promote the use of personal computers and local area networks (LANs), forming 3Com. He convinced DEC, Intel, and Xerox to work together to promote Ethernet as a standard, the so-called "DIX" standard, for "Digital/Intel/Xerox"; it standardized the 10 megabits/second Ethernet, with 48-bit destination and source addresses and a global 16-bit type field. The standard was first published on September 30, 1980. It competed with two largely proprietary systems, token ring and ARCNET, but those soon found themselves buried under a tidal wave of Ethernet products. In the process, 3Com became a major company.
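The header layout of the DIX standard (a 48-bit destination address, a 48-bit source address, and a 16-bit type field) can be illustrated with a short Python sketch. This is only an illustration under stated assumptions: the function names `parse_dix_header` and `format_mac` are hypothetical, the input is assumed to begin at the destination address (with no preamble), and the type field is assumed to be in network (big-endian) byte order.

```python
import struct

HEADER_LEN = 14  # 6 bytes destination + 6 bytes source + 2 bytes type


def format_mac(raw: bytes) -> str:
    """Render a 48-bit address as colon-separated hexadecimal octets."""
    return ":".join(f"{octet:02x}" for octet in raw)


def parse_dix_header(frame: bytes):
    """Split the first 14 bytes of a frame into (destination, source, type).

    Hypothetical helper for illustration; it assumes the frame starts at
    the destination address and performs no checksum validation.
    """
    if len(frame) < HEADER_LEN:
        raise ValueError("frame is shorter than the 14-byte header")
    # "!" selects network (big-endian) byte order for the 16-bit type field.
    dst, src, frame_type = struct.unpack("!6s6sH", frame[:HEADER_LEN])
    return format_mac(dst), format_mac(src), frame_type


# Example frame: broadcast destination, a made-up source address,
# and type 0x0800 (the value assigned to IPv4), followed by payload bytes.
example = bytes.fromhex("ffffffffffff" "001122334455" "0800") + b"payload"
dst, src, frame_type = parse_dix_header(example)
print(dst, src, hex(frame_type))
```

Because the type field is global, a receiver can use it alone to decide which protocol should handle the payload, which the 8-bit addresses and per-protocol packet types of the experimental Ethernet did not allow.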

Twisted-pair Ethernet systems have been developed since the mid-1980s, beginning with StarLAN and becoming widely known with 10BASE-T. These systems replaced the coaxial cable on which early Ethernets were deployed with a system of hubs linked by unshielded twisted pair (UTP), and ultimately abandoned the CSMA/CD scheme in favor of a switched full-duplex system offering higher performance.
